Coordinate System

A coordinate system allows one to place points on a plane in a precise way. In other words, each point in the plane is given a precise manner of specifying their location. The most useful coordinate system is called rectangular coordinates system (also known as Cartesian coordinate system), and Polar Coordinate system.

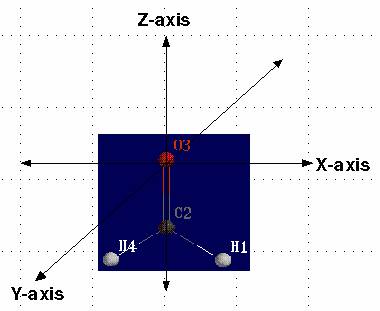

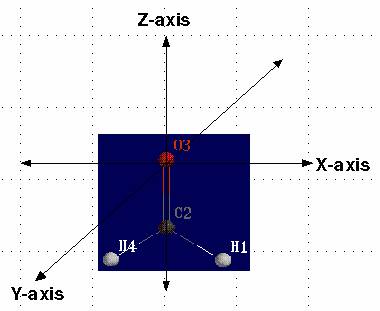

To define the atomic positions, numbers are needed - a set of coordinates. These may be external coordinates, referred to some set of coordinate axes, or they may be internal coordinates, defining the various interatomic distances and angles in the molecule of interest.

The geometry of a molecule can be described using one of three different methods.

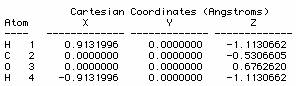

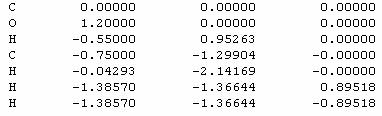

The first is by using cartesian coordinates. Using the x-y-z coordinate system, the scientist must identify the coordinates for each atom in the molecule. However, this method is only efficient for small molecules.

The second method uses a molecular editor or graphical user interface (GUI). These are computer programs which allow you to construct various molecules. The program then automatically calculates the geometry of the molecule. GUIs work well for larger molecules.

The third method is called a Z-Matrix. The Z-Matrix is a simple, but rough, geometrical approximation. It works by identifying each atom in a molecule by a bond distance, bond angle and dihedral angle in relation to other atoms in the molecule. Z-Matrices work well for large molecules because the Z-Matrix can be easily converted to cartesian coordinates using Shodor's Z-Matrix Conversion Tool.

A dihedral angle is formed from four atoms, and helps to define the dimensionality of the molecule.

In chemistry, the Z-matrix is a way to represent a system built of atoms. A Z-matrix is also known as an internal coordinate representation. It provides a description of each atom in a molecule in terms of its atomic number, bond length, bond angle, and dihedral angle, the so-called internal coordinates, although it is not always the case that a Z-matrix will give information regarding bonding since the matrix itself is based on a series of vectors describing atomic orientations in space. However, it is convenient to write a Z-matrix in terms of bond lengths, angles, and dihedrals since this will preserve the actual bonding characteristics. The name arises because the Z-matrix assigns the second atom along the Z-axis from the first atom, which is at the origin.

Z-matrices can be converted to cartesian coordinates and back, as the information content is identical. They are used for creating input geometries for molecular systems in many molecular modelling and computational chemistry programs. A skillful choice of internal coordinates can make the interpretation of results straightforward. Also, since Z-matrices can contain molecular connectivity information (but do not always contain this information), quantum chemical calculations such as geometry optimization may be performed faster, because an educated guess is available for an initial Hessian matrix, and more natural internal coordinates are used rather than Cartesian coordinates. The Z-matrix representation is often preferred, because this allows symmetry to be enforced upon the molecule (or parts thereof) by setting certain angles as constant.

When constructing a Z-matrix, you should follow these steps:

Use this page to create a Z-matrix and convert it to Cartesian Coordinates for use in the ChemViz Program. This form permits you to convert a Z-matrix composed of 3 to 25 atoms. If you have more than 25 atoms, you should consider the use of a molecular editor. It can also be located at http://www.shodor.org/chemviz/babelex.html and http://www.shodor.org/chemviz/

The methane molecule can be described by the following cartesian coordinates (in Ångströms):

C 0.000000 0.000000 0.000000

H 0.000000 0.000000 1.089000

H 1.026719 0.000000 -0.363000

H -0.513360 -0.889165 -0.363000

H -0.513360 0.889165 -0.363000

The corresponding Z-matrix, which starts from the carbon atom, could look like this:

C

H 1 1.089000

H 1 1.089000 2 109.4710

H 1 1.089000 2 109.4710 3 120.0000

H 1 1.089000 2 109.4710 3 -120.0000

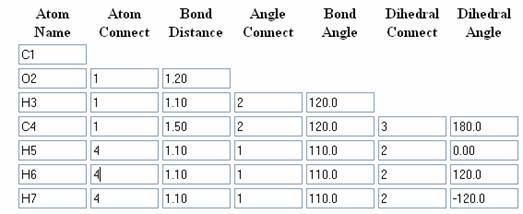

Atom Name, Atom Connect, Bond Distance, Angle Connect, Bond Angle, Dihedral Connect, Dihedral Angle

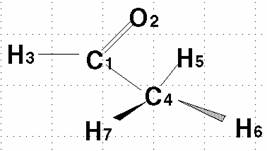

Acetaldehyde:

Building up of Acetaldehyde Molecule:

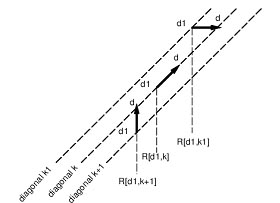

Here is the numbered molecule. Use this as a reference when following along in the example. Notice that the black arrow means that atom H7 is coming out of the screen and towards you. The shaded arrow H6 is going into the monitor.

These pictures show the five atoms that make up one plane. The included atoms are both carbons, the oxygen, and two hydrogens, each from opposite ends. This geometry is important when finding the dihedral angles.

|

C1 |

|

C1 |

|

|

|

O2 |

1 |

1.22 |

|

C1 |

|

|

|

|

|

O2 |

1 |

1.22 |

|

|

|

H3 |

1 |

1.09 |

2 |

120.0 |

|

C1 |

|

|

|

|

|

|

|

O2 |

1 |

1.22 |

|

|

|

|

|

H3 |

1 |

1.09 |

2 |

120.0 |

|

|

|

C4 |

1 |

1.54 |

2 |

120.0 |

3 |

180.0 |

|

C1 |

|

|

|

|

|

|

|

O2 |

1 |

1.22 |

|

|

|

|

|

H3 |

1 |

1.09 |

2 |

120.0 |

|

|

|

C4 |

1 |

1.54 |

2 |

120.0 |

3 |

180.0 |

|

H5 |

4 |

1.09 |

1 |

110.0 |

2 |

000.0 |

|

C1 |

|

|

|

|

|

|

|

O2 |

1 |

1.22 |

|

|

|

|

|

H3 |

1 |

1.09 |

2 |

120.0 |

|

|

|

C4 |

1 |

1.54 |

2 |

120.0 |

3 |

180.0 |

|

H5 |

4 |

1.09 |

1 |

110.0 |

2 |

000.0 |

|

H6 |

4 |

1.09 |

1 |

110.0 |

2 |

120.0 |

|

C1 |

|

|

|

|

|

|

|

O2 |

1 |

1.22 |

|

|

|

|

|

H3 |

1 |

1.09 |

2 |

120.0 |

|

|

|

C4 |

1 |

1.54 |

2 |

120.0 |

3 |

180.0 |

|

H5 |

4 |

1.09 |

1 |

110.0 |

2 |

000.0 |

|

H6 |

4 |

1.09 |

1 |

110.0 |

2 |

120.0 |

|

H7 |

4 |

1.09 |

1 |

110.0 |

2 |

-120.0 |

Cartesian coordinate system

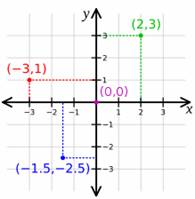

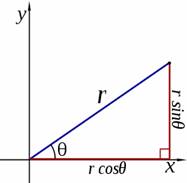

A Cartesian coordinate system specifies each point uniquely in a plane by a pair of numerical coordinates, which are the signed distances from the point to two fixed perpendicular directed lines, measured in the same unit of length.

Each reference line is called a coordinate axis or just axis of the system, and the point where they meet is its origin. The coordinates can also be defined as the positions of the perpendicular projections of the point onto the two axes, expressed as a signed distances from the origin.

Choosing a Cartesian coordinate system for a plane means choosing an ordered pair of lines (axes) at right angles to each other, a single unit of length for both axes, and an orientation for each axis. The point where the axes meet is taken as the origin for both axes, thus turning each axis into a number line. Each coordinate of a point p is obtained by drawing a line through p perpendicular to the associated axis, finding the point q where that line meets the axis, and interpreting q as a number of that number line.

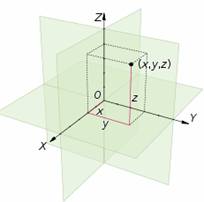

Choosing a Cartesian coordinate system for a three-dimensional space means choosing an ordered triplet of lines (axes), any two of them being perpendicular; a single unit of length for all three axes; and an orientation for each axis. As in the two-dimensional case, each axis becomes a number line. The coordinates of a point p are obtained by drawing a line through p perpendicular to each axis, and reading the points where these lines meet the axes as three numbers of these number lines.

Alternatively, the coordinates of a point p can also be taken as the (signed) distances from p to the three planes defined by the three axes. If the axes are x, y, and z, then the x coordinate is the distance from the plane defined by the y and z axes. The distance is to be taken with the + or − sign, depending on which of the two half-spaces separated by that plane contains p. The y and z coordinates can be obtained in the same way from the (x,z) and (x,y) planes, respectively.

One can use the same principle to specify the position of any point in three-dimensional space by three Cartesian coordinates, its signed distances to three mutually perpendicular planes (or, equivalently, by its perpendicular projection onto three mutually perpendicular lines). In general, one can specify a point in space of any dimension n by use of n Cartesian coordinates, the signed distances from n mutually perpendicular hyperplanes.

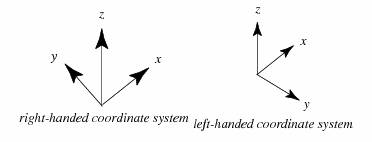

Once the x- and y-axes are specified, they determine the line along which the z-axis should lie, but there are two possible directions on this line. The two possible coordinate systems which result are called 'right-handed' and 'left-handed'. The standard orientation, where the xy-plane is horizontal and the z-axis points up (and the x- and the y-axis form a positively oriented two-dimensional coordinate system in the xy-plane if observed from above the xy-plane) is called right-handed or positive.

Cartesian coordinates are the foundation of analytic geometry, and provide enlightening geometric interpretations for many other branches of mathematics, such as linear algebra, complex analysis, differential geometry, multivariate calculus, group theory, and more. A familiar example is the concept of the graph of a function. Cartesian coordinates are also essential tools for most applied disciplines that deal with geometry, including astronomy, physics, engineering, and many more. They are the most common coordinate system used in computer graphics, computer-aided geometric design, and other geometry-related data processing.

Each axis may have different units of measurement associated with it (such as kilograms, seconds, pounds, etc.). Although four- and higher-dimensional spaces are difficult to visualize, the algebra of Cartesian coordinates can be extended relatively easily to four or more variables, so that certain calculations involving many variables can be done. (This sort of algebraic extension is what is used to define the geometry of higher-dimensional spaces.) Conversely, it is often helpful to use the geometry of Cartesian coordinates in two or three dimensions to visualize algebraic relationships between two or three of many non-spatial variables.

The graph of a function or relation is the set of all points satisfying that function or relation. For a function of one variable, f, the set of all points (x,y) where y = f(x) is the graph of the function f. For a function of two variables, g, the set of all points (x,y,z) where z = g(x,y) is the graph of the function g. A sketch of the graph of such a function or relation would consist of all the salient parts of the function or relation which would include its relative extrema, its concavity and points of inflection, any points of discontinuity and its end behavior. All of these terms are more fully defined in calculus. Such graphs are useful in calculus to understand the nature and behavior of a function or relation.

Polar Coordinates:

The usual Cartesian coordinate system can be quite difficult to use in certain situations. Some of the most common situations when Cartesian coordinates are difficult to employ involve those in which circular, cylindrical, or spherical symmetry is present. For these situations it is often more convenient to use a different coordinate system.

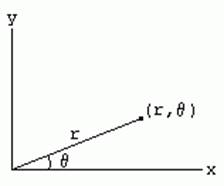

In mathematics, the polar coordinate system is a two-dimensional coordinate system in which each point on a plane is determined by a distance from a fixed point and an angle from a fixed direction.

The fixed point (analogous to the origin of a Cartesian system) is called the pole, and the ray from the pole with the fixed direction is the polar axis. The distance from the pole is called the radial coordinate or radius, and the angle is the angular coordinate, polar angle, or azimuth.

It is common to represent the point by an ordered pair (r,theta). Using standard trigonometry we can find conversions from Cartesian to polar coordinates

![]()

and from polar to Cartesian coordinates

![]()

There are two common methods for extending the polar coordinate system to three dimensions. In the cylindrical coordinate system, a z-coordinate with the same meaning as in Cartesian coordinates is added to the r and θ polar coordinates. Spherical coordinates take this a step further by converting the pair of cylindrical coordinates (r, z) to polar coordinates (ρ, φ) giving a triple (ρ, θ, φ).

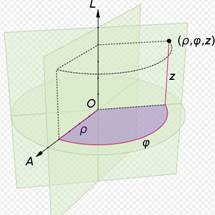

Cylindrical Polar Coordinate system:

A cylindrical coordinate system is a three-dimensional coordinate system, where each point is specified by the two polar coordinates of its perpendicular projection onto some fixed plane, and by its (signed) distance from that plane.

The polar coordinates may be called the radial distance or radius, and the angular position or azimuth, respectively. The third coordinate may be called the height or altitude (if the reference plane is considered horizontal), longitudinal position, or axial position. The line perpendicular to the reference plane that goes through its origin may be called the cylindrical axis or longitudinal axis.

Cylindrical coordinates are useful in connection with objects and phenomena that have some rotational symmetry about the longitudinal axis, such as water flow in a straight pipe with round cross-section, heat distribution in a metal cylinder, and so on.

The three coordinates (ρ, φ, z) of a point P are defined as:

Cylindrical coordinates are obtained by replacing the x and y coordinates with the polar coordinates r and theta (and leaving the z coordinate unchanged).

Thus, we have the following relations between Cartesian and cylindrical coordinates:

From cylindrical to Cartesian:

![]()

From Cartesian to cylindrical:

![]()

Coordinate range

– 0 ≤rc < ∞

0 ≤ θc ≤ 2π

– ∞ < z < ∞

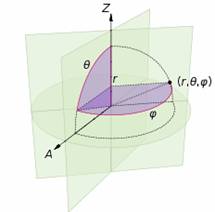

Spherical Polar Coordinate system:

In mathematics, a spherical coordinate system is a coordinate system for three-dimensional space where the position of a point is specified by three numbers: the radial distance of that point from a fixed origin, its inclination angle measured from a fixed zenith direction, and the azimuth angle of its orthogonal projection on a reference plane that passes through the origin and is orthogonal to the zenith, measured from a fixed reference direction on that plane. The inclination angle is often replaced by the elevation angle measured from the reference plane.

The radial distance is also called the radius or radial coordinate, and the inclination may be called colatitude, zenith angle, normal angle, or polar angle.

In geography and astronomy, the elevation and azimuth (or quantities very close to them) are called the latitude and longitude, respectively; and the radial distance is usually replaced by an altitude (measured from a central point or from a sea level surface).

The concept of spherical coordinates can be extended to higher dimensional spaces and are then referred to as hyperspherical coordinates.

To define a spherical coordinate system, one must choose two orthogonal directions, the zenith and the azimuth reference, and an origin point in space. These choices determine a reference plane that contains the origin and is perpendicular to the zenith. The spherical coordinates of a point P are then defined as follows:

The sign of the azimuth is determined by choosing what is a positive sense of turning about the zenith. This choice is arbitrary, and is part of the coordinate system's definition.

The elevation angle is 90 degrees (π/2 radians) minus the inclination angle.

If the inclination is zero or 180 degrees (π radians), the azimuth is arbitrary. If the radius is zero, both azimuth and inclination are arbitrary.

In linear algebra, the vector from the origin O to the point P is often called the position vector of P.

The geographic coordinate system uses the azimuth and elevation of the spherical coordinate system to express locations on Earth, calling them respectively longitude and latitude. Just as the two-dimensional Cartesian coordinate system is useful on the plane, a two-dimensional spherical coordinate system is useful on the surface of a sphere. In this system, the sphere is taken as a unit sphere, so the radius is unity and can generally be ignored. This simplification can also be very useful when dealing with objects such as rotational matrices.

The spherical coordinate system is also commonly used in 3D game development to rotate the camera around the player's position.

The coordinates used in spherical coordinates are rho, theta, and phi. Rho is the distance from the origin to the point. Theta is the same as the angle used in polar coordinates. Phi is the angle between the z-axis and the line connecting the origin and the point.

The following are the relations between Cartesian and spherical coordinates:

From spherical to Cartesian:

![]()

From Cartesian to spherical:

![]()

Relations between cylindrical and spherical coordinates also exist:

From spherical to cylindrical:

![]()

From cylindrical to spherical:

Coordinate range:

0 ≤rs < ∞

0 ≤θs ≤ π

0 ≤φ ≤ 2π

MODELING SMALL MOLECULES

Small molecules can be modeled using co-ordinate system. Both Cartesian and polar co-ordinate systems were used to model the biomolecules. Especially the model of molecules depends upon the co-ordinates utilized in the co-ordinate system.

Model of a molecule can be visualized using molecular visualization tools. Example: Rasmol, Molmol, Swiss-PDB Viewer.

Rasmol: http://rasmol.org/

Molmol: http://hugin.ethz.ch/wuthrich/software/molmol/index.html

SPDBViewer: http://spdbv.vital-it.ch/

These visualization tools are free for academic purpose. One can download these softwares from their respective websites and can install in their computer. Molecules can be visualized through the installed programs.

These programs used to convert the co-ordinates in the structure file into the graphical display form. Different forms of display for example CPK model, ribbon model, wire model, stick model and Ball and stick model etc., are available. For all these display methods, the basic information was taken from the defined internal co-ordinates.

Usually structure files possess Cartesian co-ordinates. So these programs can simply help to view Cartesian co-ordinates in graphical form. Mostly PDB file format used for molecular structure. PDF format contains Cartesian co-ordinates x, y, z values which can be utilized for visualization purpose.

If the structure file present in other co-ordinate forms, for example, polar co-ordinate form, then these co-ordinate values first converted into cartesina co-ordinates and then be used for visualization purpose.

The methane molecule can be described by the following cartesian coordinates (in Ångströms):

C 0.000000 0.000000 0.000000

H 0.000000 0.000000 1.089000

H 1.026719 0.000000 -0.363000

H -0.513360 -0.889165 -0.363000

H -0.513360 0.889165 -0.363000

The corresponding Z-matrix, which starts from the carbon atom, could look like this:

C

H 1 1.089000

H 1 1.089000 2 109.4710

H 1 1.089000 2 109.4710 3 120.0000

H 1 1.089000 2 109.4710 3 -120.0000

Atom Name, Atom Connect, Bond Distance, Angle Connect, Bond Angle, Dihedral Connect, Dihedral Angle

MODELLING DNA

The structure of DNA is available in different conformations namely A, B, C and Z forms. The “B” form is the most common and biologically active form. In the “B” form DNA has some constant features namely the distance between residues is usually (tr) 3.4Ao; Number of residues per turn (pitch) is 10, the distance (length) is 34Ao, the angle tilt of the bases in DNA is 36o (tw). The bases are usually perpendicular to the helix axis (z-axis or dyad axis). The diameter of the helical structure is 20A. Two strands are antiparallel to each other.

For generation of standard DNA structures, with the helical symmetry, in addition to building blocks only two parameters are needed, viz. the base pair rise tr- which is the distance between the successive base pairs along the helical axis and the twist tw- which is the angle of rotation of the following base pair with respect to existing one, are needed. If one of the Cartesian axes (in general Z- axis) coincides with the helical axis of the molecule, we can generate the DNA polymer using set of rules given below.

Suppose xi, yi and zi are the coordinates of the ith atom in a building block, the coordinates of the same atom in the nth residue can be obtained by:

• Rotating coordinates of all the atoms in a block by angle (n-1).tw by a rotational transformation,

• Followed by translation of the unit along the helix axis by an amount (n-1).tr.

If the building blocks are in the Cylindrical polar coordinate system (ri, θiand zi designating coordinates of the ith atom ) the task is easier. In this case, radius rin of ith atom in the n th residue does not change with the residue number. Angle θin becomes θi + (n-1). tw, and Zin the z coordinates of the ith atom in the nth residue becomes Zin = zi + (n-1).tr. Thus Xin, Yin and Zin coordinates of ith atom of the nth residues are:

Generation of the opposite strand, can be done by using symmetry information in DNA. In the case of A and B forms of DNA, the dyad axis is along the X-axis. The projection of the sugar-phosphate backbone on the base plane has a mirror symmetry along this axis. As a result, the coordinates of the corresponding atom on the opposite strand (Xin', Yin', Zin' ) are given by:

where zi is the z coordinate of the same atom in the building block and Xin, Yin and Zin are the coordinates of the corresponding atom on the generating strand.

If the opposite strand is to be generated using cylindrical polar coordinates, the following relationship can be used:

These can be then converted to the Cartesian coordinates using following relation:

The advantage with the cylindrical polar coordinates is, that for the molecule with the cylindrical symmetry, the successive residues could be added by increasing Zi by tr rise per residue and θi by 360°/N where N is the number of residues per turn or axial symmetry.

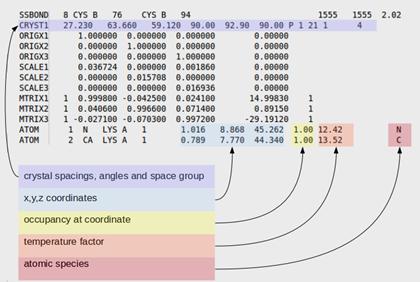

Here are a few lines from the PDB file for the DNA base pair structure directly above.

HEADER B-DNA

COMPND G-C B-DNA BASE PAIR

AUTHOR GENERATED BY GLACTONE

SEQRES 1 A 1 G

SEQRES 1 B 1 C

ATOM 1 P G A 1 -6.620 6.196 2.089

ATOM 2 OXT G A 1 -6.904 7.627 1.869

ATOM 3 O2P G A 1 -7.438 5.244 1.299

ATOM 4 O5' G A 1 -5.074 5.900 1.839

ATOM 5 C5' G A 1 -4.102 6.424 2.779

ATOM 6 C4' G A 1 -2.830 6.792 2.049

ATOM 7 O4' G A 1 -2.044 5.576 1.839

ATOM 8 C3' G A 1 -2.997 7.378 0.649

The last three columns are the XYZ coordinates of the atoms.

MODELLING PROTEINS

In contrast to DNA, proteins have wide range of structures due to the presence of different amino acid residues (20 basic amino acids). Due to the variation in monomeric residues , symmetric structures rarely occur in proteins. Protein models are represented by either Cartesian (both globular and fibrous proteins) or cylindrical (fibrous proteins) or spherical (globular proteins) co-ordinate system. Generally 3D structures of proteins were represented by using Cartesian co-ordinate system. The PDB file format is an example for Cartesian co-ordinate system (x, y, z).

When protein structure was represented by spherical co-ordinate system, three co-ordinates psi and phi angles and radius of the sphere were used for defining the structures. Especially secondary structures of polypeptide chains were usually represented by the psi and phi angles because variation in these angles leads to different secondary structures. Different secondary structures for different psi and phi angles were represented in the following table.

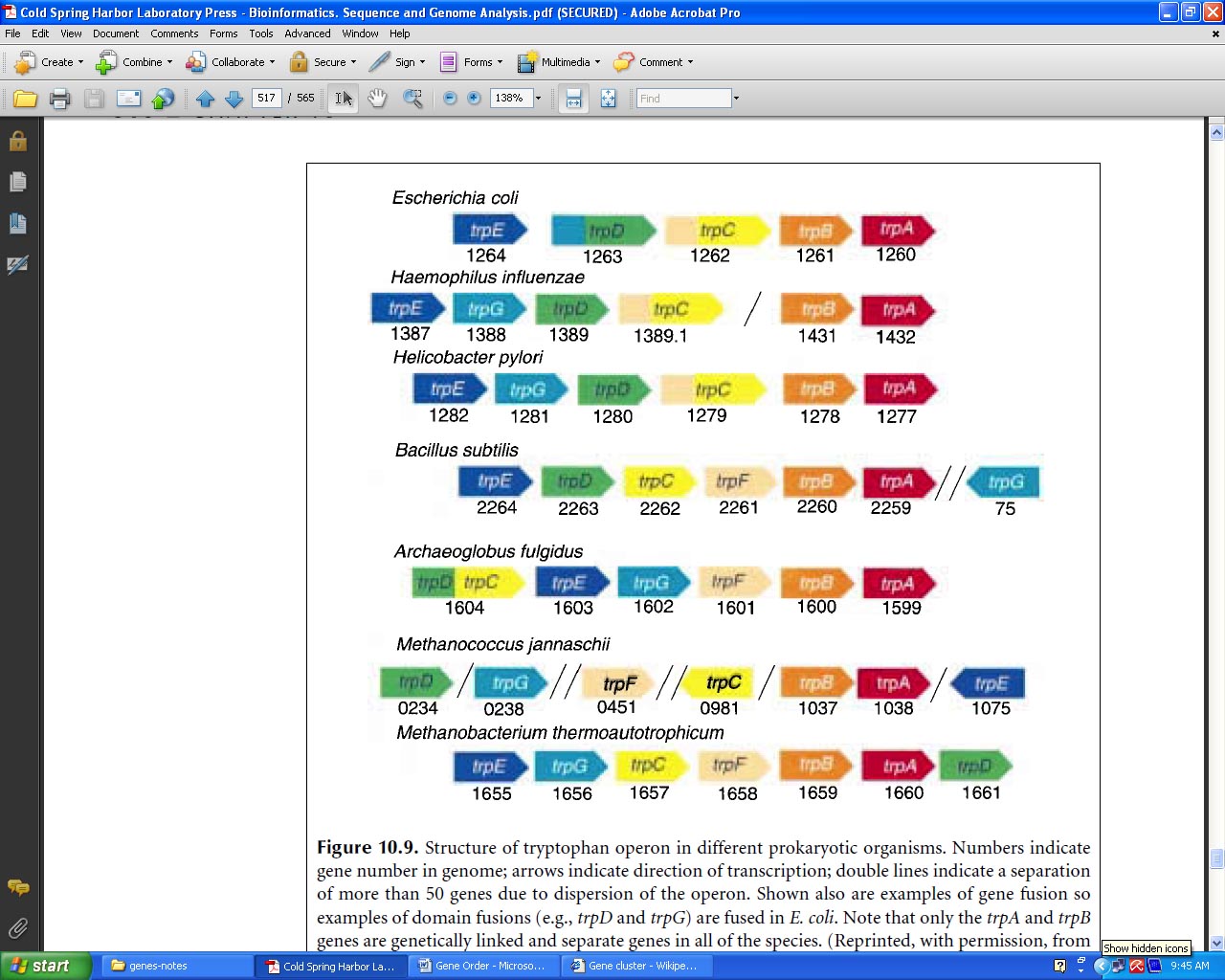

Gene Order:

A Gene order is the permutation of genome arrangement. So far a fair amount of work trying to describe whether gene orders evolve according to a molecular clock (molecular clock hypothesis) or in jumps (punctuated equilibrium). Some researches on Gene orders of animals' mitochondrial gomone reveal that the mutation rate of gene orders is not a constant in some degrees. Two important observations have been made with regard to gene order: First, order is highly conserved in closely related species but becomes changed by rearrangements over evolutionary time. Second, group of genes that have a similar biological function tend to remain localized in a group or cluster.

In classical genetics, synteny describes the physical co-localization of genetic loci on the same chromosome within an individual or species. Synteny is a neologism meaning "on the same ribbon"; Greek: σύν, syn = along with + ταινία, tainiā = band.

The concept is related to genetic linkage: Linkage between two loci is established by the observation of lower-than-expected recombination frequencies between two or more loci. In contrast, any loci on the same chromosome are by definition syntenic, even if their recombination frequency cannot be distinguished from unlinked loci by practical experiments. Thus, in theory, all linked loci are syntenic, but not all syntenic loci are necessarily linked. Similarly, in genomics, the genetic loci on a chromosome are syntenic regardless of whether this relationship can be established by experimental methods such as DNA sequencing/assembly, genome walking, physical localization or hap-mapping.

Shared synteny describes preserved co-localization of genes on chromosomes of related species. During evolution, rearrangements to the genome such as chromosome translocations may separate two loci apart, resulting in the loss of synteny between them. Conversely, translocations can also join two previously separate pieces of chromosomes together, resulting in a gain of synteny between loci. Stronger-than-expected shared synteny can reflect selection for functional relationships between syntenic genes, such as combinations of alleles that are advantageous when inherited together, or shared regulatory mechanisms.

The analysis of synteny in the gene order sense has several applications in genomics. Shared synteny is one of the most reliable criteria for establishing the orthology of genomic regions in different species. Additionally, exceptional conservation of synteny can reflect important functional relationships between genes. For example, the order of genes in the "Hox cluster", which are key determinants of the animal body plan and which interact with each other in critical ways, is essentially preserved throughout the animal kingdom. Patterns of shared synteny or synteny breaks can also be used as characters to infer the phylogenetic relationships among several species, and even to infer the genome organization of extinct ancestral species. A qualitative distinction is sometimes drawn between macrosynteny, preservation of synteny in large portions of a chromosome, and microsynteny, preservation of synteny for only a few genes at a time.

Gene Cluster:

A gene cluster is a set of two or more genes that serve to encode for the same or similar products. Because populations from a common ancestor tend to possess the same varieties of gene clusters, they are useful for tracing back recent evolutionary history. Because of this, they were used by Luigi Luca Cavalli-Sforza to identify ethnic groups within Homo sapiens and their closeness to each other.

An example of a gene cluster is the Human β-globin gene cluster, which contains five functional genes and one non-functional gene for similar proteins. Hemoglobin molecules contain any two identical proteins from this gene cluster, depending on their specific role.

Gene clusters are created by the process of gene duplication and divergence. A gene is accidentally duplicated during cell division, so that its descendants have two copies of the gene, which initially code for the same protein or otherwise have the same function. In the course of subsequent evolution, they diverge, so that the products they code for have different but related functions, with the genes still being adjacent on the chromosome. This may happen repeatedly. The process was described by Susumu Ohno in his classic book Evolution by Gene Duplication (1970).

Gene Ontology: http://www.geneontology.org/

The Gene Ontology, or GO, is a major bioinformatics initiative to unify the representation of gene and gene product attributes across all species. The aims of the Gene Ontology project are threefold; firstly, to maintain and further develop its controlled vocabulary of gene and gene product attributes; secondly, to annotate genes and gene products, and assimilate and disseminate annotation data; and thirdly, to provide tools to facilitate access to all aspects of the data provided by the Gene Ontology project.

The Gene Ontology was originally constructed in 1998 by a consortium of researchers studying the genome of three model organisms: Drosophila melanogaster (fruit fly), Mus musculus (mouse), and Saccharomyces cerevisiae (brewers' or bakers' yeast). Many other model organism databases have joined the Gene Ontology consortium, contributing not only annotation data, but also contributing to the development of the ontologies and tools to view and apply the data. As of January 2008, GO contains over 24,500 terms applicable to a wide variety of biological organisms. There is a significant body of literature on the development and use of GO, and it has become a standard tool in the bioinformatics arsenal.

The Gene Ontology project provides ontology of defined terms representing gene product properties. The ontology covers three domains; cellular component, the parts of a cell or its extracellular environment; molecular function, the elemental activities of a gene product at the molecular level, such as binding or catalysis; and biological process, operations or sets of molecular events with a defined beginning and end, pertinent to the functioning of integrated living units: cells, tissues, organs, and organisms.

Each GO term within the ontology has a term name, which may be a word or string of words; a unique alphanumeric identifier; a definition with cited sources; and a namespace indicating the domain to which it belongs. Terms may also have synonyms, which are classed as being exactly equivalent to the term name, broader, narrower, or related; references to equivalent concepts in other databases; and comments on term meaning or usage. The GO ontology is structured as a directed acyclic graph, and each term has defined relationships to one or more other terms in the same domain, and sometimes to other domains. The GO vocabulary is designed to be species-neutral, and includes terms applicable to prokaryotes and eukaryotes, single and multicellular organisms.

id: GO:0000016

name: lactase activity

namespace: molecular_function

def: "Catalysis of the reaction: lactose + H2O = D-glucose + D-galactose." [EC:3.2.1.108]

synonym: "lactase-phlorizin hydrolase activity" BROAD [EC:3.2.1.108]

synonym: "lactose galactohydrolase activity" EXACT [EC:3.2.1.108]

xref: EC:3.2.1.108

xref: MetaCyc:LACTASE-RXN

xref: Reactome:20536

is_a: GO:0004553 ! hydrolase activity, hydrolyzing O-glycosyl compounds

Genome annotation is the practice of capturing data about a gene product, and GOES annotations use terms from the GO ontology to do so.

In addition to the gene product identifier and the relevant GO term, GO annotations have the following data:

The evidence code comes from the Evidence Code Ontology, a controlled vocabulary of codes covering both manual and automated annotation methods. For example, Traceable Author Statement (TAS) means a curator has read a published scientific paper and the metadata for that annotation bears a citation to that paper; Inferred from Sequence Similarity (ISS) means a human curator has reviewed the output from a sequence similarity search and verified that it is biologically meaningful. Annotations from automated processes (for example, remapping annotations created using another annotation vocabulary) are given the code Inferred from Electronic Annotation (IEA). As these annotations are not checked by a human, the GO Consortium considers them to be less reliable and does not include them in the data available online in AmiGO. Full annotation data sets can be downloaded from the GO website.

Gene product: Actin, alpha cardiac muscle 1, UniProtKB: P68032

GO term: heart contraction ; GO:0060047 (biological process)

Evidence code: Inferred from Mutant Phenotype (IMP)

Reference: PMID:17611253

Assigned by: UniProtKB, June 06, 2008

There are a large number of tools available both online and to download that use the data provided by the GO project. The vast majority of these come from third parties; the GO Consortium develops and supports two tools, AmiGO and OBO-Edit.

AmiGO is a web-based application that allows users to query, browse and visualize ontologies and gene product annotation data. In addition, it also has a BLAST tool, tools allowing analysis of larger data sets, and an interface to query the GO database directly.

AmiGO can be used online at the GO website to access the data provided by the GO Consortium, or can be downloaded and installed for local use on any database employing the GO database schema . It is free open source software and is available as part of the go-dev software distribution.

OBO-Edit is an open source, platform-independent ontology editor

developed and maintained by the Gene Ontology Consortium. It is implemented in

Java, and uses a graph-oriented approach to display and edit ontologies.

OBO-Edit includes a comprehensive search and filter interface, with the option

to render subsets of terms to make them visually distinct; the user interface

can also be customized according to user preferences. OBO-Edit also has a

reasoner that can infer links that have not been explicitly stated, based on

existing relationships and their properties. Although it was developed for

biomedical ontologies, OBO-Edit can be used to view, search and edit any

ontology. It is freely available to download.

Plasticity

The availability of the genome map has allowed investigation of issues such as the rates of recombination per physical distance and gene density across chromosomes. Phenotypic plasticity, in biology, describes the ability of an organism to change its phenotype in response to changes in the environment. Plasticity (tissues), in body tissues, plasticity refers to the ability of differentiated cells to undergo transdifferentiation.

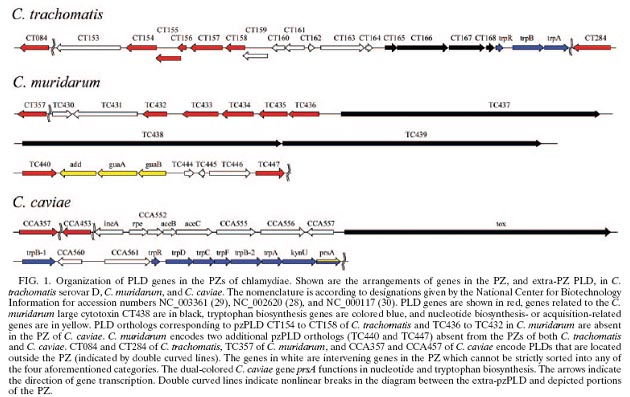

The genomes of different disease-causing C. trachomatis strains share more than 99% sequence identity. The genomes of different disease-causing C. trachomatis strains share more than 99% sequence identity. Genetic variations among these strains primarily map to a short region of their genomes termed the plasticity zone (PZ). Two strain-variable PZ gene families, the chlamydial cytotoxins and phospholipase D homologs, share homology with well-characterized virulence factors of other pathogens.

Most C. trachomatis genes are highly conserved, suggesting these "core component" genes perform critical functions. In contrast, variability in pathogenicity factors in the PZ among different C. trachomatis strains suggests these genes may be amenable to manipulation.

Members of the genus Chlamydia are obligate intracellular bacteria that are ubiquitous pathogens of mammals. Despite a broad range of host species, disease manifestations, and tissue tropisms of these organisms in nature, the genome sequences of chlamydiae are remarkably similar. Chlamydia trachomatis strains that cause distinct diseases in humans, including trachoma and chlamydial sexually transmitted diseases, as well as the more distantly related mouse pathogen Chlamydia muridarum, exhibit near genomic synteny. Comparative genomics suggests that genes necessary for the intracellular survival of chlamydiae are conserved, while genetic shift is limited to host- and tissue-specific virulence genes. Diversity in infection tropism and disease potential correlates with genes located in a hypervariable portion of the chlamydial genome termed the plasticity zone (PZ). Although previous studies have suggested virulence-related functions for many PZ genes, a large group of open reading frames encoding proteins with homology to the phospholipase D (PLD) family enzymes remain uncharacterized.

Gene Network

A gene regulatory network or genetic regulatory network (GRN) is a collection of DNA segments in a cell which interact with each other (indirectly through their RNA and protein expression products) and with other substances in the cell, thereby governing the rates at which genes in the network are transcribed into mRNA.

In general, each mRNA molecule goes on to make a specific protein (or set of proteins). In some cases this protein will be structural, and will accumulate at the cell-wall or within the cell to give it particular structural properties. In other cases the protein will be an enzyme; a micro-machine that catalyses a certain reaction, such as the breakdown of a food source or toxin. Some proteins though serve only to activate other genes, and these are the transcription factors that are the main players in regulatory networks or cascades. By binding to the promoter region at the start of other genes they turn them on, initiating the production of another protein, and so on. Some transcription factors are inhibitory.

In single-celled organisms regulatory networks respond to the external environment, optimising the cell at a given time for survival in this environment. Thus a yeast cell, finding itself in a sugar solution, will turn on genes to make enzymes that process the sugar to alcohol. This process, which we associate with wine-making, is how the yeast cell makes its living, gaining energy to multiply, which under normal circumstances would enhance its survival prospects.

In multicellular animals the same principle has been put in the service of gene cascades that control body-shape. Each time a cell divides, two cells result which, although they contain the same genome in full, can differ in which genes are turned on and making proteins. Sometimes a 'self-sustaining feedback loop' ensures that a cell maintains its identity and passes it on.

Boolean network

It provides output either in on or off state. One input and one output. The following example illustrates how a Boolean network can model a GRN together with its gene products (the outputs) and the substances from the environment that affect it (the inputs). Stuart Kauffman was amongst the first biologists to use the metaphor of Boolean networks to model genetic regulatory networks.

The validity of the model can be tested by comparing simulation results with time series observations.

Continuous networks

Many components act as input and output of one event act as input of an another input. Example: Cellular differentiation and morphogenesis.

Continuous network models of GRNs are an extension of the boolean networks described above. Nodes still represent genes and connections between them regulatory influences on gene expression. Genes in biological systems display a continuous range of activity levels and it has been argued that using a continuous representation captures several properties of gene regulatory networks not present in the Boolean model. Formally most of these approaches are similar to an artificial neural network, as inputs to a node are summed up and the result serves as input to a sigmoid function, but proteins do often control gene expression in a synergistic, i.e. non-linear, way. However there is now a continuous network model that allows grouping of inputs to a node thus realizing another level of regulation. This model is formally closer to a higher order recurrent neural network. The same model has also been used to mimic the evolution of cellular differentiation and even multicellular morphogenesis.

Stochastic gene networks

It represents stepwise events. Example: transcription, translation

Recent experimental results have demonstrated that gene expression is a stochastic process. Thus, many authors are now using the stochastic formalism, after the work by. Works on single gene expression and small synthetic genetic networks, such as the genetic toggle switch of Tim Gardner and Jim Collins, provided additional experimental data on the phenotypic variability and the stochastic nature of gene expression. The first versions of stochastic models of gene expression involved only instantaneous reactions and were driven by the Gillespie algorithm.

Operon concept is an example to explain gene network.

Gene Density

Gene density refers to the occurrence of more genes in a defined area of genome. It is measured with the help physical map. Gene density, as measured by the number of cDNAs hybridizing to YACs within physical map intervals, varies by a factor of more than two between chromosome clusters and their arms, again with a relatively sharp boundary. The availability of the genome map has allowed investigation of issues such as the rates of recombination per physical distance and gene density across chromosomes.

SEGMENT DUPLICATIONS

Segmental duplications are segments of DNA with near-identical sequence. Segmental duplications (SDs or LCRs) have had roles in creating new primate genes reflected on human genetic variation. In human genome chromosomes Y and 22 have the greatest SDs proportion: 50.4% and 11.9%.

Low copy repeats (also low-copy repeats or LCRs) are highly homologous sequence elements within the eukaryotic genome arising from segmental duplication. They are typically 10–300 kb in length, and bear >95% sequence identity. Though rare in most mammals, LCRs comprise a large portion of the human genome due to a significant expansion during primate evolution. Misalignment of LCRs during non-allelic homologous recombination (NAHR) is an important mechanism underlying the chromosomal microdeletion disorders as well as their reciprocal duplication partners. Many LCRs are concentrated in "hotspots", such as the 17p11-12 region, 27% of which is composed of LCR sequence.

Segments of DNA with near-identical sequence (segmental duplications or duplicons) in the human genome can be hot spots or predisposition sites for the occurrence of non-allelic homologous recombination or unequal crossing-over leading to genomic mutations such as deletion, duplication, inversion or translocation. These structural alterations, in turn, can cause dosage imbalance of genetic material or lead to the generation of new gene products resulting in diseases defined as genomic disorders.

On the basis of the June 2002 (NCBI Build 30) human genome assembly, a total of 107.4 Mb (3.53%) of the human genome content (3,043.1 Mb) were found to be involved in recent segmental duplications by BLAST analysis criteria. This content is composed of more than 1,530 distinct intrachromosomal segmental duplications (80.3 Mb or 2.64% of the total genome) and 1,637 distinct interchromosomal duplications (43.8 Mb or 1.44% of the total genome). In addition, 29% of all duplications are located in unfinished regions of the current genome assembly. Our results are shown using the Generic Genome Browser. We have also found that 38% of the duplications (52.3 Mb) can be considered as tandem duplications - defined here as two related duplicons separated by less than 200 kb.

The size, orientation, and contents of segmental duplications are highly variable and most of them show great organizational complexity. This is perhaps due to successive transposition and rearrangement events leading to the creation of segmental duplications. In many cases, a contiguous duplicon is organized into multiple modules with different orientations and sizes. The characterization of most large segmental duplications is complicated by the fact that many of them (29% of all duplications) are only represented as draft sequences from the current genome assembly.

The segmental duplication map of the human genome should serve as a guide for investigation of the role of duplications in genomic disorders, as well as their contributions to normal human genomic variability.

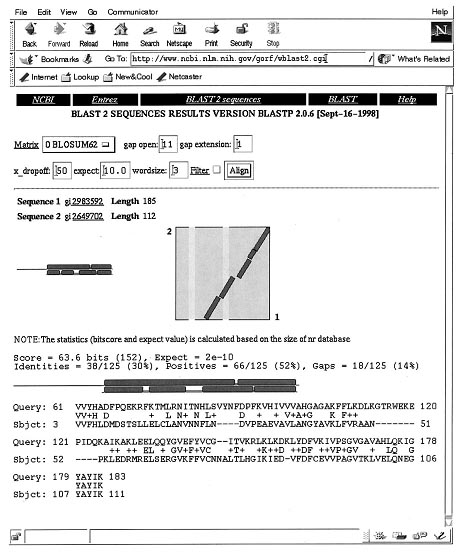

BLAST and detection of segmental duplication

Each BLAST report was sorted by chromosomal coordinates. All identical hits (same coordinate alignments), including suboptimal BLAST alignments recognized by multiple, overlapping alignments, as well as mirror hits (reverse coordinate alignments) from the BLAST results of the intrachromosomal set were removed. Contiguous alignments separated by a distance of less than 3 kb, then 5 kb, and subsequently 9 kb were joined (stepwise) into modules in order to traverse masked repetitive sequences and to overcome breaks in the BLAST alignments caused by insertions/deletions and sequence gaps. Such contiguous sequence alignment modules represent sequence similarity between the subject and query chromosome sequence in question (at their respective positional coordinates). This pairwise sequence comparison procedure serves as a rapid and robust way to detect duplication relationships. However, because of the use of masked sequences, our method would only yield a poor (on average 0.1-0.5 kb) resolution for the determination of the precise duplication alignment boundaries. Results were classified as either duplications or 'questionable' results based on sequencing status of the region and the percent sequence similarity between the detected alignments. Questionable duplications are results that fall within regions containing draft sequences with > 99.5 % detected sequence identity with another region.

TANDEM REPEATS

Tandem repeats occur in DNA when a pattern of two or more nucleotides is repeated and the repetitions are directly adjacent to each other. An example would be: A-T-T-C-G-A-T-T-C-G-A-T-T-C-G in which the sequence A-T-T-C-G is repeated three times. They include three subclasses: satellites, minisatellites and microsatellites.

Satellites

The size of a satellite DNA ranges from 100 kb to over 1 Mb. In humans, a well known example is the alphoid DNA located at the centromere of all chromosomes. Its repeat unit is 171 bp and the repetitive region accounts for 3-5% of the DNA in each chromosome. Other satellites have a shorter repeat unit. Most satellites in humans or in other organisms are located at the centromere.

Minisatellites

The size of a minisatellite ranges from 1 kb to 20 kb. One type of minisatellites is called variable number of tandem repeats (VNTR). Its repeat unit ranges from 9 bp to 80 bp. They are located in non-coding regions. The number of repeats for a given minisatellite may differ between individuals. This feature is the basis of DNA fingerprinting. Another type of minisatellites is the telomere. In a human germ cell, the size of a telomere is about 15 kb. In an aging somatic cell, the telomere is shorter. The telomere contains tandemly repeated sequence GGGTTA.

Microsatellites are also known as short tandem repeats (STR), because a repeat unit consists of only 1 to 6 bp and the whole repetitive region spans less than 150 bp. Similar to minisatellites, the number of repeats for a given microsatellite may differ between individuals. Therefore, microsatellites can also be used for DNA fingerprinting. In addition, both microsatellite and minisatellite patterns can provide information about paternity.

Uses:

Tandem repeat describes a pattern that helps determine an individual's inherited traits.

Tandem repeats can be very useful in determining parentage. Short tandem repeats are used for certain genealogical DNA tests.

DNA is examined from microsatellites within the chromosomal DNA. Minisatellite is another way of saying special regions of the loci. Polymerase chain reaction (or PCR) is performed on the minisatellite areas. The PCR must be performed on each organism being tested. The amplified material is then run through electrophoresis. By checking the percentage of bands that match, parentage is determined.

In the field of Computer Science, tandem repeats in strings (e.g., DNA sequences) can be efficiently detected using suffix trees or suffix arrays.

Studies in 2004 linked the unusual genetic plasticity of dogs to mutations in tandem repeats.

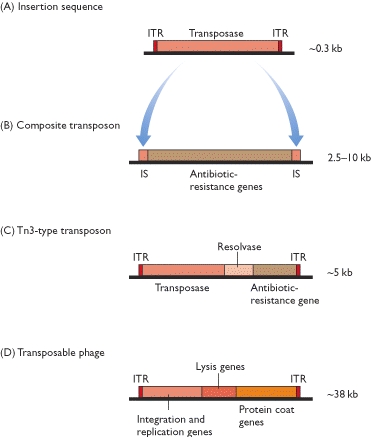

TRANSPOSABLE ELEMENTS

Transposable elements -- also known as transposons or "jumping genes" -- are genes that can move themselves or a copy of themselves from one chromosome to another. Because they can move to different chromosomes, they contribute to the creation of new genes.

Transposable elements (TEs), also known as "jumping genes," are DNA sequences that move from one location on the genome to another. These elements were first identified more than 50 years ago by geneticist Barbara McClintock of Cold Spring Harbor Laboratory in New York. Biologists were initially skeptical of McClintock's discovery. Over the next several decades, however, it became apparent that not only do TEs "jump," but they are also found in almost all organisms (both prokaryotes and eukaryotes) and typically in large numbers. For example, TEs make up approximately 50% of the human genome and up to 90% of the maize genome.

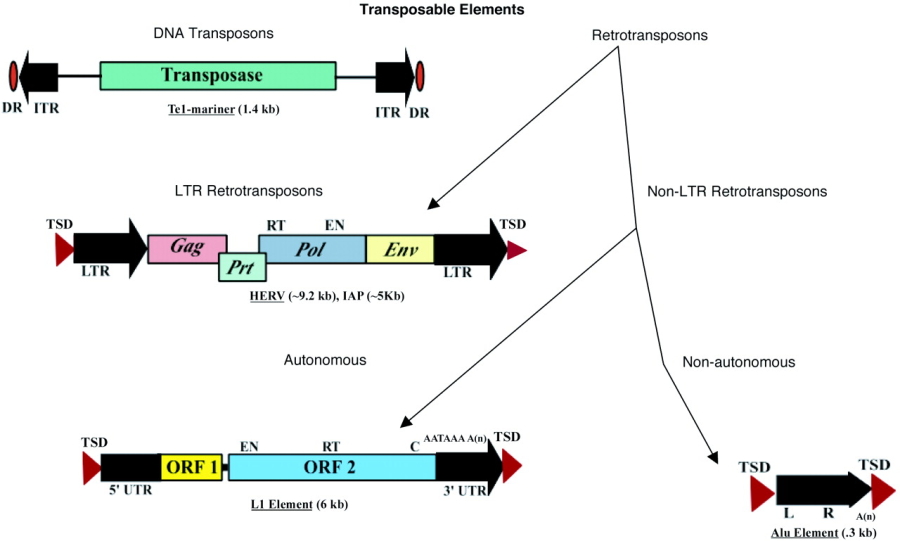

Today, scientists know that there are many different types of TEs, as well as a number of ways to categorize them. One of the more common divisions is between those TEs that require reverse transcription (i.e., the transcription of RNA into DNA) in order to transpose and those that do not. The former elements are known as retrotransposons or class 1 TEs, whereas the latter are known as DNA transposons or class 2 TEs. The Ac/Ds system that McClintock discovered falls in the latter category.

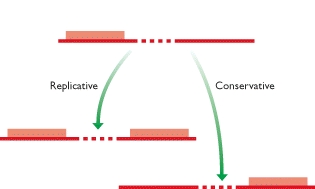

All complete or "autonomous" class 2 TEs encode the protein transposase, which they require for insertion and excision. Some of these TEs also encode other proteins. Note that DNA transposons never use RNA intermediaries—they always move on their own, inserting or excising themselves from the genome by means of a so-called "cut and paste" mechanism.

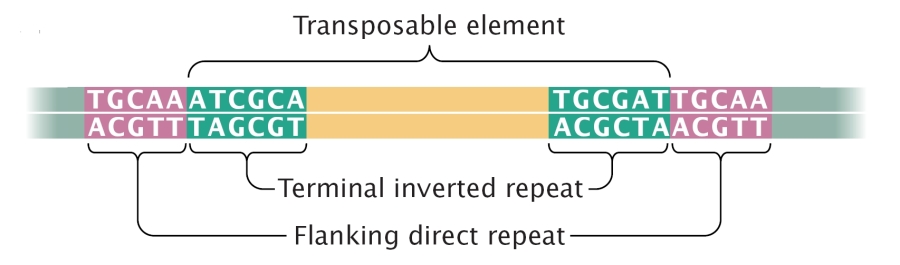

Class 2 TEs are characterized by the presence of terminal inverted repeats, about 9 to 40 base pairs long, on both of their ends. As the name suggests and as Figure 3 shows, terminal inverted repeats are inverted complements of each other; for instance, the complement of ACGCTA (the inverted repeat on the right side of the TE in the figure) is TGCGAT (which is the reverse order of the terminal inverted repeat on the left side of the TE in the figure). One of the roles of terminal inverted repeats is to be recognized by transposase.

In addition, all TEs in both class 1 and class 2 contain flanking direct repeats (Figure 3). Flanking direct repeats are not actually part of the transposable element; rather, they play a role in insertion of the TE. Moreover, after a TE is excised, these repeats are left behind as "footprints." Sometimes, these footprints alter gene expression (i.e., expression of the gene in which they have been left behind) even after their related TE has moved to another location on the genome.

Less than 2% of the human genome is composed of class 2 TEs. This means that the majority of the substantial portion of the human genome that is mobile consists of the other major class of TEs—the retrotransposons.

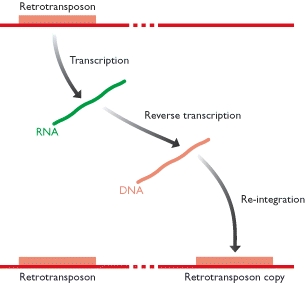

Unlike class 2 elements, class 1 elements—also known as retrotransposons—move through the action of RNA intermediaries. In other words, class 1 TEs do not encode transposase; rather, they produce RNA transcripts and then rely upon reverse transcriptase enzymes to reverse transcribe the RNA sequences back into DNA, which is then inserted into the target site.

Both class 1 and class 2 TEs can be either autonomous or nonautonomous. Autonomous TEs can move on their own, while nonautonomous elements require the presence of other TEs in order to move. This is because nonautonomous elements lack the gene for the transposase or reverse transcriptase that is needed for their transposition, so they must "borrow" these proteins from another element in order to move. Ac elements, for example, are autonomous because they can move on their own, whereas Ds elements are nonautonomous because they require the presence of Ac in order to transpose.

Applications

The first TE was discovered in the plant maize (Zea mays, corn species), and is named dissociator (Ds). Likewise, the first TE to be molecularly isolated was from a plant (Snapdragon). Appropriately, TEs have been an especially useful tool in plant molecular biology. Researchers use them as a means of mutagenesis. In this context, a TE jumps into a gene and produces a mutation. The presence of such a TE provides a straightforward means of identifying the mutant allele, relative to chemical mutagenesis methods.

Sometimes the insertion of a TE into a gene can disrupt that gene's function in a reversible manner, in a process called insertional mutagenesis; transposase-mediated excision of the DNA transposon restores gene function. This produces plants in which neighboring cells have different genotypes. This feature allows researchers to distinguish between genes that must be present inside of a cell in order to function (cell-autonomous) and genes that produce observable effects in cells other than those where the gene is expressed.

TEs are also a widely used tool for mutagenesis of most experimentally tractable organisms. The Sleeping Beauty transposon system has been used extensively as an insertional tag for identifying cancer genes

The Tc1/mariner-class of TEs Sleeping Beauty transposon system, awarded as the Molecule of the Year 2009 is active in mammalian cells and are being investigated for use in human gene therapy.

PSEUDOGENES

Pseudogenes are dysfunctional relatives of known genes that have lost their protein-coding ability or are otherwise no longer expressed in the cell. Although some do not have introns or promoters (these pseudogenes are copied from mRNA and incorporated into the chromosome and are called processed pseudogenes), most have some gene-like features (such as promoters, CpG islands, and splice sites), they are nonetheless considered nonfunctional, due to their lack of protein-coding ability resulting from various genetic disablements (stop codons, frameshifts, or a lack of transcription) or their inability to encode RNA (such as with rRNA pseudogenes). Thus the term, coined in 1977 by Jacq, et al., is composed of the prefix pseudo, which means false, and the root gene, which is the central unit of molecular genetics.

Because pseudogenes are generally thought of as the last stop for genomic material that is to be removed from the genome, they are often labeled as junk DNA. Nonetheless, pseudogenes contain fascinating biological and evolutionary histories within their sequences. This is due to a pseudogene's shared ancestry with a functional gene: in the same way that Darwin thought of two species as possibly having a shared common ancestry followed by millions of years of evolutionary divergence, a pseudogene and its associated functional gene also share a common ancestor and have diverged as separate genetic entities over millions of years.

Properties of pseudogenes

Pseudogenes are characterized by a combination of homology to a known gene and nonfunctionality. That is, although every pseudogene has a DNA sequence that is similar to some functional gene, they are nonetheless unable to produce functional final products (nonfunctionality). Pseudogenes are quite difficult to identify and characterize in genomes, because the two requirements of homology and nonfunctionality are implied through sequence calculations and alignments rather than biologically proven.

Types of pseudogenes

There are three main types of pseudogenes, all with distinct mechanisms of origin and characteristic features. The classifications of pseudogenes are as follows:

Pseudogenes can complicate molecular genetic studies. For example, a researcher who wants to amplify a gene by PCR may simultaneously amplify a pseudogene that shares similar sequences. This is known as PCR bias or amplification bias. Similarly, pseudogenes are sometimes annotated as genes in genome sequences. Processed pseudogenes often pose a problem for gene prediction programs, often being misidentified as real genes or exons. It has been proposed that identification of processed pseudogenes can help improve the accuracy of gene prediction methods. It has also been shown that the parent sequences that give rise to processed pseudogenes lose their coding potential faster than those giving rise to non-processed pseudogenes.

Noncoding conservation

Whole genome comparisons between species that are either closely related or more phylogenetically separated—such as human, mouse, and dog—have revealed a subset of sequences that neither code for proteins nor are transcribed, yet are nevertheless conserved, but whose function is as yet unknown (conserved nongenic sequences, or CNGs). Emmanouil Dermitzakis and colleagues at the University of Geneva employed data-mining techniques to examine the extent to which these CNGs are spread across 14 mammalian species. In the October 2 Science, they report that CNGs are highly conserved in 14 species and constitute a new class of sequences that are even more evolutionarily constrained than protein coding regions (Science, DOI:10.1126/science.1087047, October 2, 2003).

In addition to protein coding sequence, the human genome contains a significant amount of regulatory DNA, the identification of which is proving somewhat recalcitrant to both in silico^ and functional methods. An approach that has been used with some success is comparative sequence analysis, whereby equivalent genomic regions from different organisms are compared in order to identify both similarities and differences. In general, similarities in sequence between highly divergent organisms imply functional constraint. We have used a whole-genome comparison between humans and the pufferfish, Fugu rubripes, to identify nearly 1,400 highly conserved non-coding sequences. Given the evolutionary divergence between these species, it is likely that these sequences are found in, and furthermore are essential to, all vertebrates. Most, and possibly all, of these sequences are located in and around genes that act as developmental regulators. Some of these sequences are over 90% identical across more than 500 bases, being more highly conserved than coding sequence between these two species. Despite this, we cannot find any similar sequences in invertebrate genomes. In order to begin to functionally test this set of sequences, we have used a rapid in vivo assay system using zebrafish embryos that allows tissue-specific enhancer activity to be identified. Functional data is presented for highly conserved non-coding sequences associated with four unrelated developmental regulators (SOX21, PAX6, HLXB9, and SHH), in order to demonstrate the suitability of this screen to a wide range of genes and expression patterns. Of 25 sequence elements tested around these four genes, 23 show significant enhancer activity in one or more tissues. We have identified a set of non-coding sequences that are highly conserved throughout vertebrates. They are found in clusters across the human genome, principally around genes that are implicated in the regulation of development, including many transcription factors. These highly conserved non-coding sequences are likely to form part of the genomic circuitry that uniquely defines vertebrate development.

Identification and characterisation of cis-regulatory regions within the non-coding DNA of vertebrate genomes remain a challenge for the post-genomic era. The idea that animal development is controlled by cis-regulatory DNA elements (such as enhancers and silencers) is well established and has been elegantly described in invertebrates such as Drosophila and the sea urchin. These elements are thought to comprise clustered target sites for large numbers of transcription factors and collectively form the genomic instructions for developmental gene regulatory networks (GRNs). However, relatively little is known about GRNs in vertebrates. Any approach to elucidate such networks necessitates the discovery of all constituent cis-regulatory elements and their genomic locations. Unfortunately, initial in silico identification of such sequences is difficult, as current knowledge of their syntax or grammar is limited. By contrast, computational approaches for protein-coding exon prediction are well established, based on their characteristic sequence features, evolutionary conservation across distant species, and the availability of cDNAs and expressed sequence tags (ESTs), which greatly facilitate their annotation.

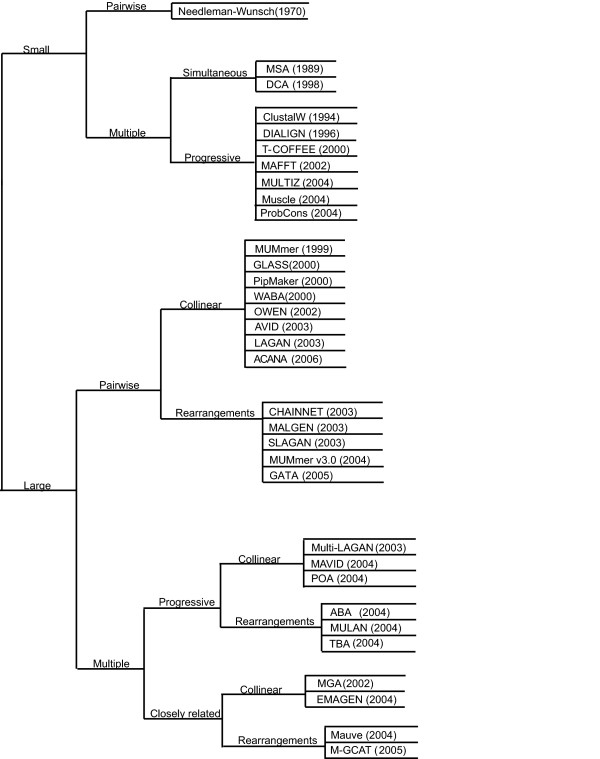

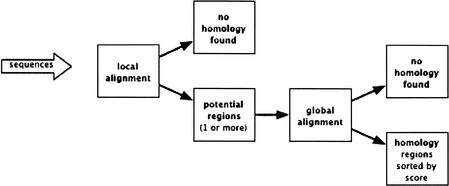

The completion of a number of vertebrate genome sequences, as well as the concurrent development of genomic alignment, visualisation, and analytical bioinformatics tools, has made large genomic comparisons not only possible but an increasingly popular approach for the discovery of putative cis-regulatory elements. Comparing DNA sequences from different organisms provides a means of identifying common signatures that may have functional significance. Alignment algorithms optimise these comparisons so that slowly evolving regions can be anchored together and highlighted against a background of more rapidly evolving DNA that is free of any functional constraints.

Another highly successful approach to increasing the resolving power of comparative analyses is to use multi-species alignments combining both closely related and highly divergent organisms. By using large evolutionary distances, even the slowest-evolving neutral DNA has reached equilibrium, thereby significantly improving the signal to noise ratio in genomic alignments. Although non-coding sequences generally lack sequence conservation between highly divergent species, there are a number of striking examples where comparison between human and pufferfish (Fugu rubripes) gene regions has readily identified highly conserved non-coding sequences that have been shown to have some function in vivo. Humans and Fugu last shared a common ancestor around 450 million years ago, predating the emergence of the majority of all extant vertebrates, implying that any non-coding sequences conserved between these two species are likely to be fundamental to vertebrate life. The Fugu genome has the added advantage of being highly compact, reducing intronic and intergenic distances almost 10-fold. Without exception, all reported examples of non-coding conservation between these two species have been associated with genes that play critical roles in development. This suggests that some aspects of developmental regulation are common to all vertebrates and that whole-genome comparisons may be particularly powerful in identifying regulatory networks of this kind.

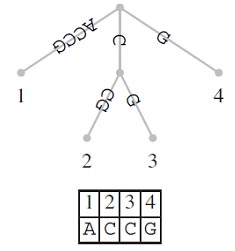

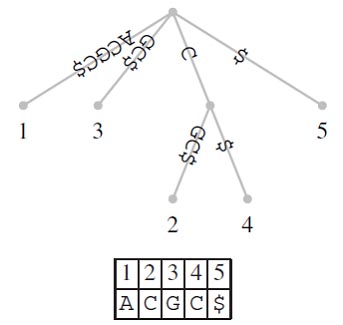

SUFFIX TREE

Molecular Biologists often search for sequence patterns in molecular genetic data. Consider for example the text t= ACCGTC and the pattern P= C. A string search should tell us as efficiently as possible that occurs in at positions 2, 3, and 6. Classical results in computer science have lead to algorithms that achieve this in time proportional to the length of T.

Suffix trees are an ideal data structure for improving on this result in cases where the text is stable and needs to be searched repeatedly. Suffix trees are an index structure of the text, which can be built in linear time [249]. Once built, the text can be searched for a pattern in time proportional to the length of the pattern rather than in time proportional to the length of the text.

A suffix tree (t) is a special kind of tree associated with a string S, where |s| = n. has as many leaves as the string has characters and these leaves are labeled 1,….n. Each edge in the tree is labeled with a substring of S. The defining feature of the suffix tree is that by concatenating the strings from the root along the edges leading to leaf i, the suffix S[i…n] is obtained. Figure shows a suffix tree for S= ACCG. If you start reading at its root and follow the path leading to leaf 2, you obtain the string CCG. This is the suffix that starts at position 2 in S.

Notice that it is possible that a suffix is a prefix of another suffix. For example, in S=ACGC the suffix S[4..4]=C is a prefix of the suffix S[2..4]=CGC. In this case, no suffix tree corresponding to S exists, as the path labeled S [4..4] would not end at a leaf. A simple method for guaranteeing the existence of a suffix tree is to ensure that the last letter of S does not occur anywhere else in S. In practice, a sentinel or terminator symbol, often denoted as $ is added to S and T is constructed from S$.

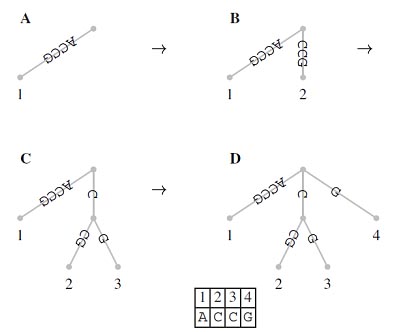

Suffix Tree Construction

Suffix trees are usually constructed through a series of intermediate trees. One possible naıve algorithm proceeds as follows: Construct a root and a leaf node labeled 1 . The edge connecting these two nodes is labeled S[1..n]. This initial tree is converted to the final structure by successively fitting the suffices, S[1..n], 1<i<n, into the intermediate trees. As demonstrated in Figure, each of these fitting steps proceeds by matching the suffix along the edge labels starting at the root until a mismatch is encountered. Remember that due to the sentinel character a mismatch is guaranteed to occur. Once this is found, there are two possibilities:

1. The match ends at the last letter of an edge label leading to node v. In this case, an edge labeled with the mismatched part of the suffix is attached to v. This edge leads to a leaf labeled with the start position of the current suffix. Examples of this are steps Aà B and Cà D in Figure.

2. The match ends inside an edge label. In this case, an interior node v is inserted just behind the end of the match. In addition, a leaf labeled i is attached to v via an edge labeled with the mismatched part of the suffix. An example of this splitting of an edge is step Bà C in Figure.

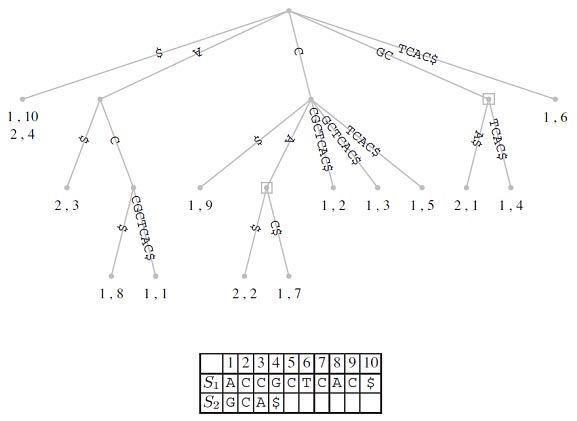

generalized suffix tree

So far, we have constructed suffix trees just from a single string. However, it is straight forward to generate what is known as a generalized suffix tree representing more than one string. Consider the two strings S1 and S2. We can proceed by first constructing the suffix tree for S1 and then inserting into this every suffix of S2. All we need to do is change the leaf labels such that they now consist of pairs of numbers identifying both the string of origin and the position within that string. As an example, consider S1=ACCGCTCAC; the corresponding suffix tree is shown in Figure. If we insert into this suffix tree S2=GCA, we obtain the tree topology shown in Figure. Using the new labeling scheme for leaves, generalized suffix trees of an arbitrary number of strings can be constructed. This opens the way for solving the longest common substring problem.

This has important biological applications where unique and repeat sequences play a central role in many fundamental as well as biotechnological problems. Finally, suffix trees can also be used for rapid inexact string matching, where <=k, mismatches between P and its occurrence in T are allowed.

Genome Anatomy

Genome anatomy is the structure of all the genetic information in the haploid portion of chromosomes of a cell. Biologists recognize that the living world comprises two types of organism:

1. Eukaryotes, whose cells contain membrane-bound compartments, including a nucleus and organelles such as mitochondria and, in the case of plant cells, chloroplasts. Eukaryotes include animals, plants, fungi and protozoa.

2. Prokaryotes whose cells were lack extensive internal compartments. There are two very different groups of prokaryotes, distinguished from one another by characteristic genetic and biochemical features:

a. the bacteria, which include most of the commonly encountered prokaryotes such as the gram-negatives (e.g. E. coli), the gram-positives (e.g. Bacillus subtilis), the cyanobacteria (e.g. Anabaena) and many more;

b. the archaea, which are less well-studied, and have mostly been found in extreme environments such as hot springs, brine pools and anaerobic lake bottoms.

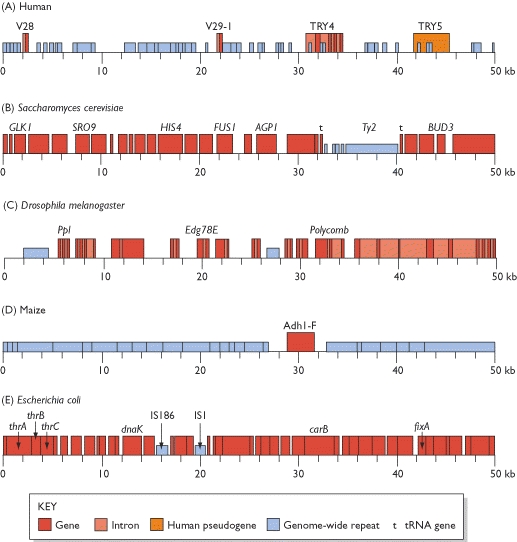

Genome anatomy comparison

The picture that emerges is that the genetic organization of the yeast genome is much more economical than that of the human version. The genes themselves are more compact, having fewer introns, and the spaces between the genes are relatively short, with much less space taken up by genome-wide repeats and other non-coding sequences. The hypothesis that more complex organisms have less compact genomes holds when other species are examined. If we agree that a fruit fly is more complex than a yeast cell but less complex than a human then we would expect the organization of the fruit-fly genome to be intermediate between that of yeast and humans. The 50-kb segment of the fruit-fly genome having 11 genes, that is more than in the human segment but fewer than in the yeast sequence. All of these genes are discontinuous, but seven have just one intron each. The gene density in the fruit-fly genome is intermediate between that of yeast and humans, and the average fruit-fly gene has many more introns than the average yeast gene but still three times fewer than the average human gene.

The comparison between the yeast, fruit-fly and human genomes also holds true when we consider the genome-wide repeats. These make up 3.4% of the yeast genome, about 12% of the fruit-fly genome, and 44% of the human genome. It is beginning to become clear that the genome-wide repeats play an intriguing role in dictating the compactness or otherwise of a genome. This is strikingly illustrated by the maize genome, which at 5000 Mb is larger than the human genome but still relatively small for a flowering plant. Only a few limited regions of the maize genome have been sequenced, but some remarkable results have been obtained, revealing a genome dominated by repetitive elements. The figure shows a 50-kb segment of this genome, either side of one member of a family of genes coding for the alcohol dehydrogenase enzymes. This is the only gene in this 50-kb region, although there is a second one, of unknown function, approximately 100 kb beyond the right-hand end of the sequence shown here. Instead of genes, the dominant feature of this genome segment is the genome-wide repeats. The majority of these are of the LTR element type, which comprise virtually all of the non-coding part of the segment, and on their own are estimated to make up approximately 50% of the maize genome. It is becoming clear that one or more families of genome-wide repeats have undergone a massive proliferation in the genomes of certain species.

The c-value paradox is the non-equivalence between genome size and gene number that is seen when comparisons are made between some eukaryotes. A good example is provided by Amoeba dubia which, being a protozoan, might be expected to have a genome of 100–500 kb, similar to other protozoa such as Tetrahymena pyriformis. In fact the Amoeba genome is over 200 000 Mb. Similarly, we might guess that the genomes of crickets are similar in size to those of other insects, but these bugs have genomes of approximately 2000 Mb, 11 times that of the fruit fly.

Eukaryotes genomes:

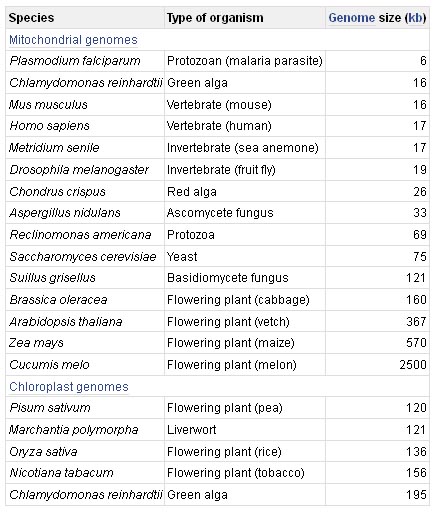

Humans are fairly typical eukaryotes and the human genome is in many respects a good model for eukaryotic genomes in general. All of the eukaryotic nuclear genomes that have been studied are, like the human version, divided into two or more linear DNA molecules, each contained in a different chromosome; all eukaryotes also possess smaller, usually circular, mitochondrial genomes. The only general eukaryotic feature not illustrated by the human genome is the presence in plants and other photosynthetic organisms of a third genome, located in the chloroplasts.

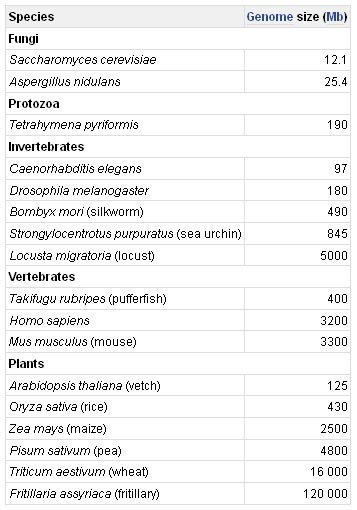

Although the basic physical structures of all eukaryotic nuclear genomes are similar, one important feature is very different in different organisms. This is genome size, the smallest eukaryotic genomes being less than 10 Mb in length, and the largest over 100 000 Mb. As can be seen in table, this size range coincides to a certain extent with the complexity of the organism, the simplest eukaryotes such as fungi having the smallest genomes, and higher eukaryotes such as vertebrates and flowering plants having the largest ones. This might appear to make sense as one would expect the complexity of an organism to be related to the number of genes in its genome - higher eukaryotes need larger genomes to accommodate the extra genes. However, the correlation is far from precise: if it was, then the nuclear genome of the yeast S. cerevisiae, which at 12 Mb is 0.004 times the size of the human nuclear genome, would be expected to contain 0.004 × 35 000 genes, which is just 140. In fact the S. cerevisiae genome contains about 5800 genes.

The yeast genome segment, which comes from chromosome III (the first eukaryotic chromosome to be sequenced), has the following distinctive features:

· It contains more genes than the human segment. This region of yeast chromosome III contains 26 genes thought to code for proteins and two that code for transfer RNAs (tRNAs), short non-coding RNA molecules involved in reading the genetic code during protein synthesis.

· Relatively few of the yeast genes are discontinuous. In this segment of chromosome III none of the genes are discontinuous. In the entire yeast genome there are only 239 introns, compared with over 300 000 in the human genome.

· There are fewer genome-wide repeats. This part of chromosome III contains a single long terminal repeat (LTR) element, called Ty2, and four truncated LTR elements called delta sequences. These five genome-wide repeats make up 13.5% of the 50-kb segment, but this figure is not entirely typical of the yeast genome as a whole. When all 16 yeast chromosomes are considered, the total amount of sequence taken up by genome-wide repeats is only 3.4% of the total. In humans, the genome-wide repeats make up 44% of the genome.

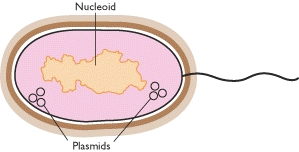

Prokaryotic genomes

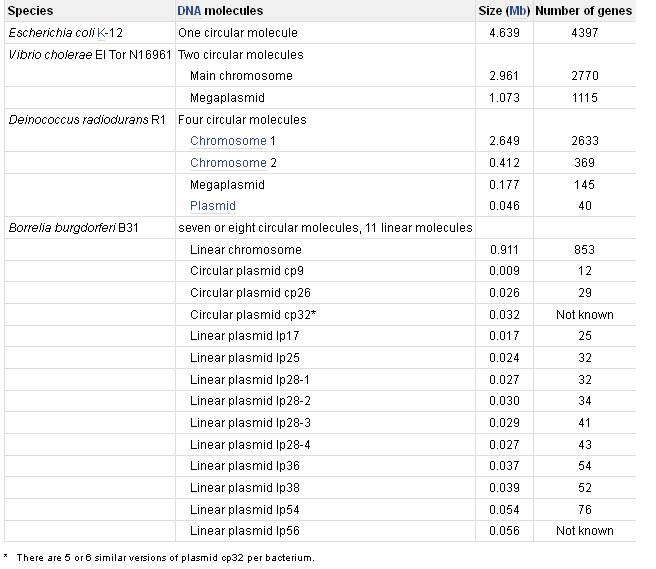

Prokaryotic genomes are very different from eukaryotic ones. There is some overlap in size between the largest prokaryotic and smallest eukaryotic genomes, but on the whole prokaryotic genomes are much smaller. For example, the E. coli K12 genome is just 4639 kb, two-fifths the size of the yeast genome, and has only 4405 genes. The physical organization of the genome is also different in eukaryotes and prokaryotes. The traditional view has been that an entire prokaryotic genome is contained in a single circular DNA molecule. As well as this single ‘chromosome’, prokaryotes may also have additional genes on independent smaller, circular or linear DNA molecules called plasmids. Genes carried by plasmids are useful, coding for properties such as antibiotic resistance or the ability to utilize complex compounds such as toluene as a carbon source, but plasmids appear to be dispensable - a prokaryote can exist quite effectively without them. We now know that this traditional view of the prokaryotic genome has been biased by the extensive research on E. coli, which has been accompanied by the mistaken assumption that E. coli is a typical prokaryote. In fact, prokaryotes display a considerable diversity in genome organization, some having a unipartite genome, like E. coli, but others being more complex. Borrelia burgdorferi B31, for example, has a linear chromosome of 911 kb, carrying 853 genes, accompanied by 17 or 18 linear and circular molecules, which together contribute another 533 kb and at least 430 genes. Multipartite genomes are now known in many other bacteria and archaea.

In one respect, E. coli is fairly typical of other prokaryotes. After our discussion of eukaryotic gene organization, it will probably come as no surprise to learn that prokaryotic genomes are even more compact than those of yeast and other lower eukaryotes. It is immediately obvious that there are more genes and less space between them, with 43 genes taking up 85.9% of the segment. Some genes have virtually no space between them: thrA and thrB, for example, are separated by a single nucleotide, and thrC begins at the nucleotide immediately following the last nucleotide of thrB. These three genes are an example of an operon, a group of genes involved in a single biochemical pathway (in this case, synthesis of the amino acid threonine) and expressed in conjunction with one another. Operons have been used as model systems for understanding how gene expression is regulated. In general, prokaryotic genes are shorter than their eukaryotic counterparts, the average length of a bacterial gene being about two-thirds that of a eukaryotic gene, even after the introns have been removed from the latter. Bacterial genes appear to be slightly longer than archaeal ones.

Anatomy of eukaryotic genome

The human genome is split into two components: the nuclear genome and the mitochondrial genome. This is the typical pattern for most eukaryotes, the bulk of the genome being contained in the chromosomes in the cell nucleus and a much smaller part located in the mitochondria and, in the case of photosynthetic organisms, in the chloroplasts.

Eukaryotic nuclear genome